A friend of mine last week shared with me a job opportunity for a backend developer and suggested I apply for it. However, when I went through the job recommendations, it was very unfortunate for me as it was seeking "US" locals only, so I almost skipped it. But the challenge was quite a refresher. This interview challenge was not just about technical skills, at least for me, lol; it was about rekindling the thrill of solving problems and crafting something entirely new using first programming principles, so I had to just try it out.

The project was to build a server from scratch using Node.js and PostgreSQL, with TypeScript—a rare chance to work with low-level utilities and revisit the fundamentals of software development.

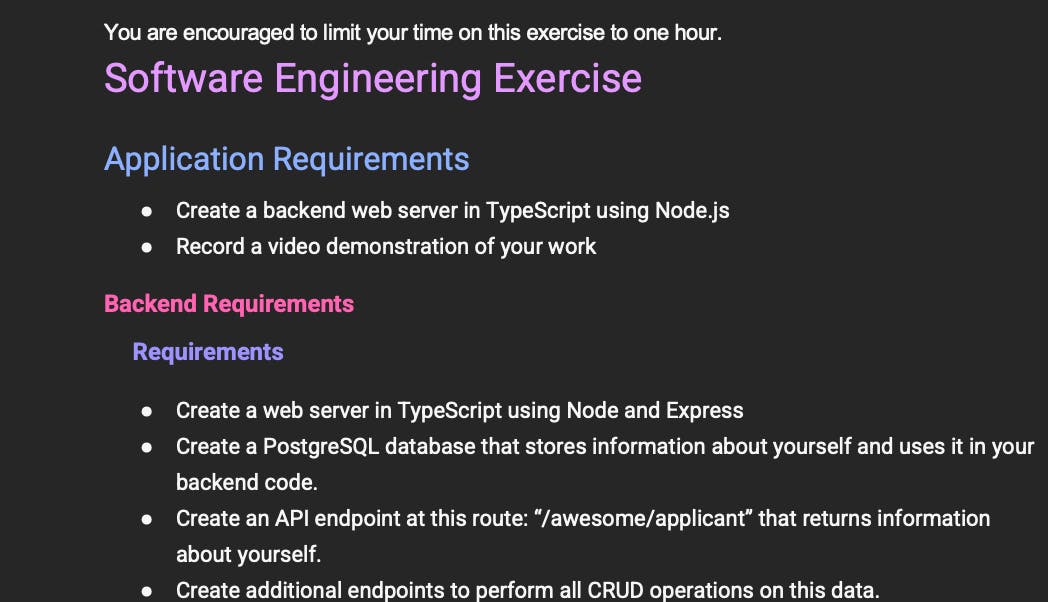

Here is the brief section from the project

So let's dig into the processes - get the code here on my github

Setting Up the Environment

Setting up a solid development environment is crucial for any project, and it was particularly important for this one where type precision was key. Here’s how I approached it:

Initializing the Environment: The first step was to create a new directory for our project to keep everything organized. Once the directory was ready, I initialized a new Node.js project. This set up the basic structure I needed to start coding.

Installing TypeScript: Since the project specifications required TypeScript, the next step was to install it along with the necessary TypeScript declarations for Node.js As shown below:

pnpm add -D typescript @types/nodeThis not only installed TypeScript but also the type definitions for Node.js, which are essential for better type checking and editor support.

Setting Up Express and Nodemon: We then need to install express to spin up the server. To handle live updates and automatic restarts during development, We must also install Nodemon.

pnpm add express pnpm add nodemonNodemon watches for changes in the source files and automatically restarts the server, which speeds up the development process immensely.

Configuring

pnpm: I prefer usingpnpmover npm or yarn because of its efficient handling of node modules through a shared symlinked repository. This not only speeds up installations but also saves disk space. To ensure that our project exclusively usedpnpm, I configured thepackage.jsonto use it for dependency management. Here’s the snippet from ourpackage.json:{ "packageManager": "pnpm" }Creating a TypeScript Configuration: A

tsconfig.jsonfile was necessary to specify the root files and the compiler options required for TypeScript. This configuration helps with managing large projects and makes the development process smoother and more manageable. Here’s a basic configuration I used:{ "compilerOptions": { "target": "es5", "module": "commonjs", "strict": true, "esModuleInterop": true, "skipLibCheck": true, "forceConsistentCasingInFileNames": true, "outDir": "./dist" }, "include": ["**/*.ts"], "exclude": ["node_modules", "dist"] }Setting Up PostgreSQL:

To integrate PostgreSQL into our project, the setup involved several steps, from installation to creating the database and tables. Here’s a detailed breakdown:

Installing PostgreSQL: I used Homebrew to install PostgreSQL:

brew install postgresqlAfter the installation, I started the PostgreSQL service using:

brew services start postgresqlCreating the Database: Once PostgreSQL was up and running, I created a new database specifically for this challenge. I chose to name the database

ChallengeDBto reflect its purpose. The command to create this database was:createdb ChallengeDBCreating the Table: With the database in place, the next step was to create a table to store applicant data. I accessed the PostgreSQL command line interface and executed the SQL command to create the table. The SQL statement looked like this:

CREATE TABLE applicants ( id SERIAL PRIMARY KEY, name VARCHAR(255), bio TEXT, experience TEXT );Let's understand the SQL for Creating the

applicantsTableThe SQL command I used to create the

applicantstable in PostgreSQL is fundamental for structuring how data will be stored and managed within the database. Here’s an explanation of each part of the command:CREATE TABLE applicants:CREATE TABLEis the command that tells PostgreSQL we want to create a new table.applicantsis the name of our table. And is how we will refer to it when inserting, updating, querying, or deleting data.

id SERIAL PRIMARY KEY:idis the name of the column.SERIALis a data type in PostgreSQL specifically designed for creating unique identifiers for the table. It is an auto-incrementing integer, which means it automatically generates a new number (incremented by 1) every time a new applicant is added.PRIMARY KEYis a constraint that ensures values in this column are unique across the table and none of them can beNULL. This is crucial as it helps maintain the integrity of the data by ensuring each row can be uniquely identified by itsid.

name VARCHAR(255):nameis the column where the applicant's name will be stored.VARCHAR(255)specifies the data type for this column.VARCHARis a variable character string. The number in parentheses,255, limits the length of the data stored here, meaning no more than 255 characters can be stored in this column. This is useful for ensuring data uniformity and optimizing storage.

bio TEXT:biois the column designed to store a biography or other textual information about the applicant.TEXTis a data type that allows you to store strings of any length, which is ideal for text that might vary significantly in length and exceed the limits ofVARCHAR.

experience TEXT:experienceis similar tobioin its usage for storing textual data. This column is intended for descriptions of the applicant’s professional experience.Using the

TEXTdata type here again allows for flexibility in the amount of text stored, accommodating everything from brief overviews to detailed narratives.

Configuring Environment Variables: To manage our database connections securely, I set up environment variables rather than hard-coding the credentials into our application code. I added the following lines to a

.envfile located at the root of my project directory:PGHOST=localhost PGUSER=myusername PGDATABASE=ChallengeDB PGPASSWORD=mypassword PGPORT=5432These environment variables (

PGHOST,PGUSER,PGDATABASE,PGPASSWORD,PGPORT) were crucial for establishing a connection to PostgreSQL from our Node.js application. They ensure that our database credentials are kept secure and make it easier to change the connection settings without modifying the code.

Building the Backend

Initializing Our Server and Database Connection

Setting Up Express and Node.js: I began by importing Express, a minimal and flexible Node.js web application framework that provides a robust set of features for web and mobile applications. This allowed us to quickly create a server by simply invoking

express().import express from "express"; // Instance of the express application. export const app: express.Application = express();Integrating PostgreSQL: Using the

pglibrary, I established a connection pool to our PostgreSQL database. A pool manages multiple database connections, keeping them open so they can be reused, which improves the performance of executing commands.import { Pool } from "pg"; const pool = new Pool({ user: process.env.PGUSER, host: process.env.PGHOST, database: process.env.PGDATABASE, password: process.env.PGPASSWORD, port: parseInt(process.env.PGPORT || "5432"), });Environment Configuration: We utilized the

dotenvlibrary to load environment variables from a.envfile intoprocess.env, ensuring our application's configuration is both secure and flexible.import "dotenv/config";

Crafting API Endpoints

Middleware to Parse JSON: It's important for our server to correctly parse incoming requests as JSON. This is effortlessly handled by Express's built-in middleware, which I enabled as follows:

app.use(express.json());Error Handling: To manage errors effectively, I implemented an asynchronous route handler. This wrapper function catches any errors that occur during the request-handling process and passes them to our error-handling middleware.

function asyncRouteHandler(fn) { return (req, res, next) => fn(req, res).catch(next); }CRUD Operations:

Create: I set up a route to add new applicants. Using a POST request, data is collected from the client, inserted into the database, and the newly created entry is returned.

app.post("/awesome/applicant", asyncRouteHandler(async (req, res) => { const { name, bio, experience } = req.body; const result = await pool.query( "INSERT INTO applicants (name, bio, experience) VALUES ($1, $2, $3) RETURNING *", [name, bio, experience] ); res.status(201).json(result.rows[0]); }));Read: A GET endpoint retrieves all applicants, allowing us to list everyone stored in our database.

app.get("/awesome/applicant", asyncRouteHandler(async (req, res) => { const dbRes = await pool.query("SELECT * FROM applicants"); res.json(dbRes.rows); }));Update: Using PATCH, this route updates an existing applicant's data based on their ID.

app.patch("/awesome/applicant/:id", asyncRouteHandler(async (req, res) => { const { id } = req.params; const { name, bio, experience } = req.body; const result = await pool.query( "UPDATE applicants SET name = $1, bio = $2, experience = $3 WHERE id = $4 RETURNING *", [name, bio, experience, id] ); if (result.rowCount === 0) { res.status(404).send("Applicant not found"); } else { res.json(result.rows[0]); } }));Delete: This endpoint removes an applicant from the database.

app.delete("/awesome/applicant/:id", asyncRouteHandler(async (req, res) => { const { id } = req.params; const result = await pool.query("DELETE FROM applicants WHERE id = $1", [id]); if (result.rowCount === 0) { res.status(404).send("Applicant not found"); } else { res.status(204).send(); } }));

General Error Handling: Finally, I added a middleware to handle any errors that weren't caught by our route-specific handlers. This catches and logs the error, and then sends a server error response to the client.

app.use((err, req, res, next) => { console.error("Error:", err); res.status(err.status || 500).send(err.message || "Server error"); });

Through these steps, we crafted a robust backend system capable of managing our database of applicants with full CRUD capabilities. This backend not only handles typical web application tasks but also ensures that errors are managed gracefully, enhancing the reliability and user experience of our application.

So, what's next? You guessed it—testing. The challenge extended the task to include testing the server's main features using Jest. Let's explore how I set up Jest and wrote a few tests.

Testing and Debugging

Testing is crucial in software development because it ensures that your application runs correctly before it gets into the hands of users. Jest is a testing framework that's easy to use while remaining powerful. It's particularly good for TypeScript applications, like ours, because it understands type-based syntax out of the box.

Setting Up Jest

Installing Jest was the first step towards doing the test - I simply ran pnpm add jest to add it to our project's development dependencies. But I didn't stop there. Since our project uses TypeScript, we also installed ts-jest and the TypeScript definitions for Jest, allowing us to write our tests in TypeScript, which Jest would understand and run.

Configuring Jest for TypeScript

I then went on to create a jest.config.js to configure Jest to work with TypeScript. This configuration file tells Jest to use ts-jest, which enables Jest to process and understand our TypeScript files.

module.exports = {

preset: 'ts-jest',

testEnvironment: 'node',

globals: {

'ts-jest': {

tsconfig: 'tsconfig.jest.json'

},

},

transform: {

'^.+\\.ts$': 'ts-jest',

},

};

I also set up a tsconfig.jest.json file to extend our existing TypeScript configuration, ensuring that Jest's TypeScript compiler options were correctly set up.

{

"extends": "./tsconfig.json",

"compilerOptions": {

"module": "commonjs",

"outDir": "./",

"noEmit": false

}

}

Writing Our Tests

For testing our Express API, I brought supertest into the mix. It's a high-level abstraction for testing HTTP, perfect for simulating GET, POST, PATCH, and DELETE requests to our server. After installing it with pnpm add supertest, I now could write tests that closely mimic how the server will be used in production.

Mocking the Database

Why mock the database? I didn’t want our tests to run against the production database, which could mess up the existing data. Instead, I mocked the PostgreSQL database using Jest. This way, our tests are fast and repeatable, unaffected by the current state of the database.

jest.mock('pg', () => ({

Pool: jest.fn(() => ({

query: jest.fn().mockImplementation((queryText, params) => {

// Mock specific SQL queries

}),

end: jest.fn(),

})),

}));

With this setup, I could write our test cases. For instance, when testing the POST route for adding a new applicant, we needed to simulate sending a JSON object to our /awesome/applicant endpoint, and then we checked the response to ensure that the status code was 201 and that the body contained the new applicant.

describe('POST /awesome/applicant', () => {

it('should create a new applicant', async () => {

// Simulate sending data

const response = await request(app)

.post('/awesome/applicant')

.send({

name: "Sara Joe",

bio: "Developer",

experience: "Entry level"

});

// Check the response

expect(response.statusCode).toBe(201);

});

});

Debugging: A Continuous Process

Debugging wasn't just a step; it was part of the process. I relied on Jest's detailed error reporting to pinpoint issues, and logging to understand the flow of our application. The console.log method was indispensable, revealing the inner workings at every step.

Every test I wrote and every bug I fixed added another layer of security, ensuring that when the project goes live, it will run smoothly for every user, every time.

Oh boy, what a refresher—I think I should be doing more of this